Facebook

Pointing out that suicides happen every 40 seconds and are the second leading cause of death for young people, Facebook has unveiled new tools to help prevent them. While it already has self-harm prevention features, they rely on users to spot and report friends' problematic posts. The company is now testing AI tech that can detect whether a post "is very likely to include thoughts of suicide." Flagged posts can then be checked by the company's Community Operations teams, opening up a new way for worrying posts to be discovered.

Facebook's AI is also helping users report friends' problematic posts. Using pattern recognition, it will make "suicide or self injury" reporting options more prominent so that a user's friends can find them easily. Facebook said, however, that the AI detection and reporting options, whether used by friends or Facebook employees, are just a "limited test" in the US for now.

Facebook has also created new Messenger tools in collaboration with the Crisis Text Line, the National Eating Disorder Association, the National Suicide Prevention Lifeline and other organizations. These will let at-risk users or concerned friends contact knowledgeable groups over chat, either directly from the organization's page or via Facebook's suicide prevention tools. The Messenger program is also in the testing phase, but Facebook will expand it "over the next several months" so that organizations can ramp up to handle increased call volumes.

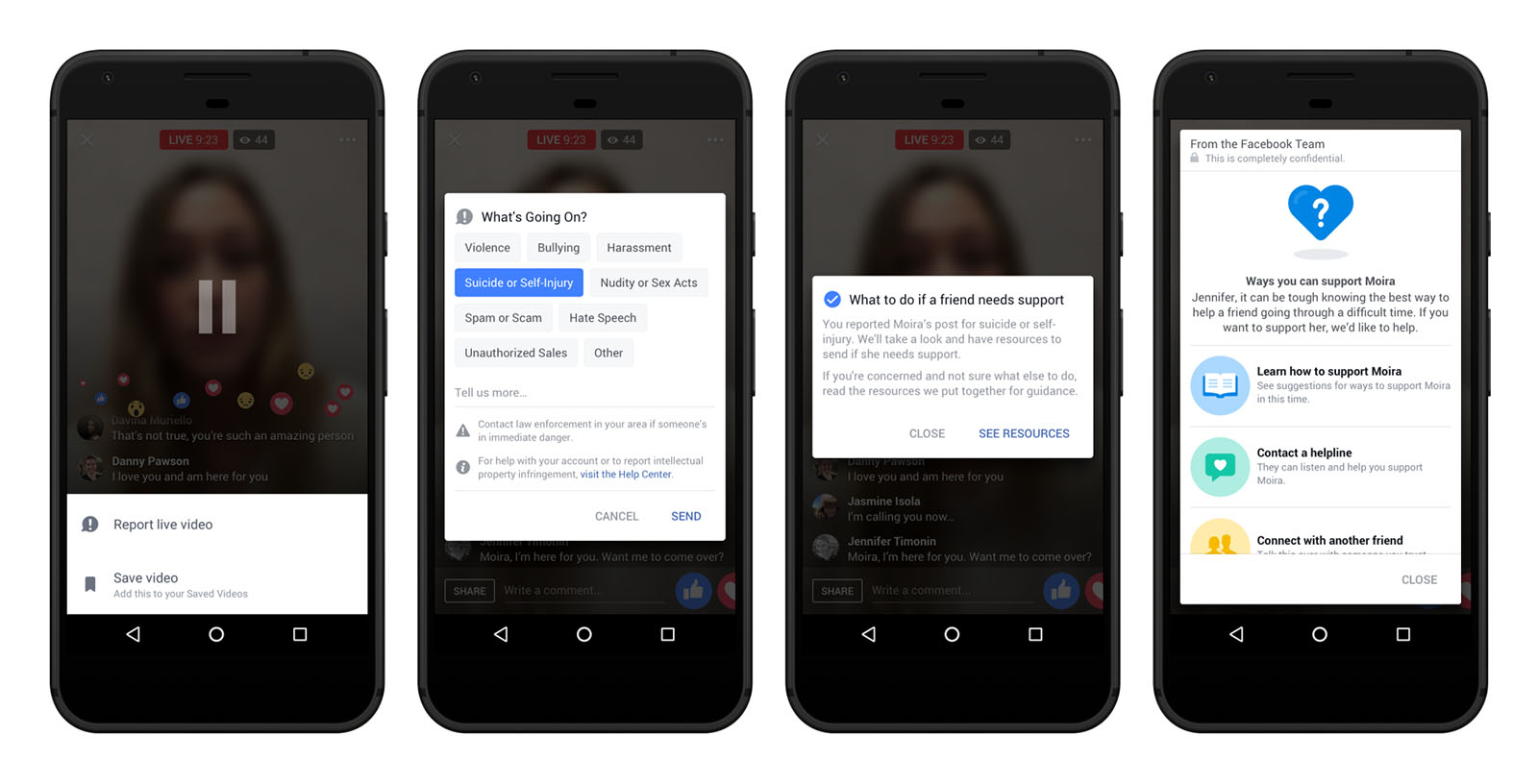

Finally, the social network has integrated suicide prevention tools into Facebook Live. If users see a troubling live post, they can reach out directly to the person and report it to Facebook at the same time, as shown above. Facebook will "also provide resources to the person reporting the live video to assist them in helping their friend," the company wrote. Meanwhile, the person sharing the video will see resources that let them reach out to a friend, contact a help line or see tips.

"Some might say we should cut off the livestream, but what we've learned is cutting off the stream too early could remove the opportunity for that person to receive help," Facebook researcher Jennifer Guadagno told TechCrunch.